- #Benchmark cpu gpu neural network training drivers#

- #Benchmark cpu gpu neural network training code#

- #Benchmark cpu gpu neural network training download#

Assume that the total volume and the number of threads used for transfer is roughly the same for the large and the small files. One of the primary reasons for this is the read/write block size.

#Benchmark cpu gpu neural network training download#

For instance, if you have 2,000,000 files, where each file is 5 KB (total size = 10 GB = 2,000,000 X 5 * 1024), downloading these many tiny files can take a few hours, compared to a few minutes when downloading 2,000 files each 5 MB in size (total size = 10 GB = 2,000 X 5 * 1024 * 1024 ), even though the total download size is the same. One of the first steps that Amazon SageMaker does is download the files from Amazon S3, which is the default input mode called File mode.ĭownloading or uploading very small files, even in parallel, is slower than larger files totaling up to the same size. This includes data from application databases extracted through an ETL process into a JSON or CSV format or image files. You can store large amounts of data in Amazon S3 at low cost. downloading data from Amazon S3, use of file systems such as Amazon EBS & Amazon Elastic File System (Amazon EFS). In this section, we look at tips to optimize data transfer via network operations, e.g. Optimizing data download over the network The following sections look at optimizing these steps. Data transfer into GPU memory – Copy the processed data from the CPU memory into the GPU memory.These operations might include converting images or text to tensors or resizing images. Data preprocessing – The CPU generally handles any data preprocessing such as conversion or resizing.

Amazon Elastic Block Store (Amazon EBS) volumes aren’t local resources, and involve network operations. Local disk refers to an instance store, where storage is located on disks that are physically attached to the host computer.

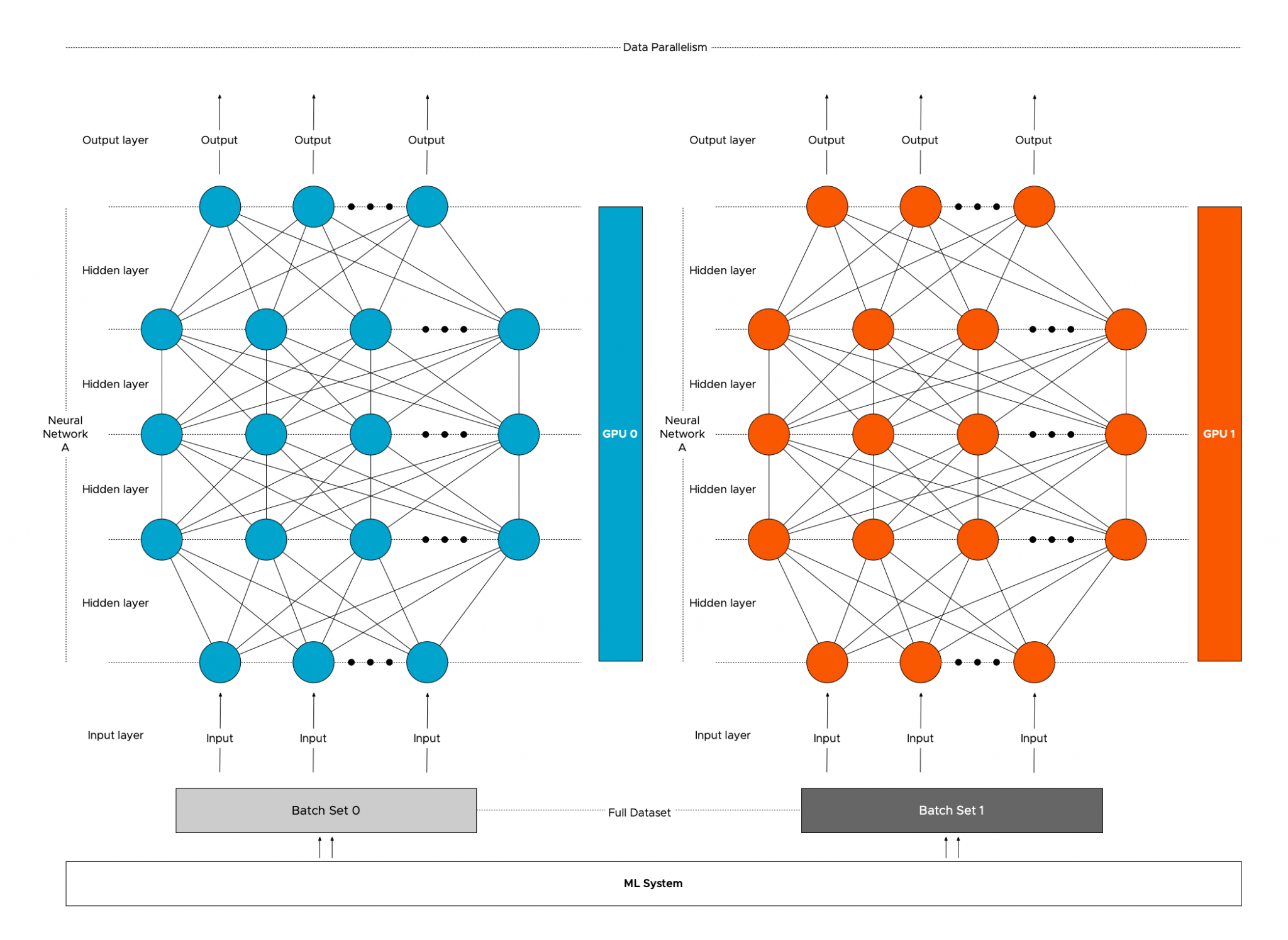

Disk I/O – Read data from local disk into CPU memory.Network operations – Download the data from Amazon Simple Storage Service (Amazon S3).The general steps usually involved in getting the data into the GPU memory are the following: The following diagram illustrates the architecture of optimizing I/O. The challenge is to optimize I/O or the network operations in such a way that the GPU never has to wait for data to perform its computations. The faster you load data into GPU, the quicker it can perform its operation. For GPUs to perform these operations, the data must be available in the GPU memory. The basicsĪ single GPU can perform tera floating point operations per second (TFLOPS), which allows them to perform operations 10–1,000 times faster than CPUs. You can typically see performance improvements up to 10-fold in overall GPU training by just optimizing I/O processing routines. In this post, we focus on general techniques for improving I/O to optimize GPU performance when training on Amazon SageMaker, regardless of the underlying infrastructure or deep learning framework. Optimizing communication between GPUs during multi-GPU or distributed trainingĪmazon SageMaker is a fully managed service that enables developers and data scientists to quickly and easily build, train, and deploy machine learning (ML) models at any scale.Optimizing I/O and network operations to make sure that the data is fed to the GPU at the rate that matches its computations.
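The small-files comparison can be made concrete with a toy cost model. This is a sketch under assumed numbers, not a measurement of Amazon S3. The 50 ms per-operation latency, 8 MiB maximum read/write block size, 10 threads, and 100 MiB/s aggregate bandwidth are hypothetical values chosen for illustration. `estimate_seconds` is a helper introduced here. In this model each read/write operation moves at most one block, so a 5 KiB file still pays a full operation's latency.

```python
import math

KIB = 1024
MIB = 1024 * KIB


def estimate_seconds(num_files: int, file_size: int, *, threads: int,
                     block_size: int, op_latency_s: float, bandwidth: float) -> float:
    """Toy estimate of wall-clock transfer time (hypothetical model, not S3's real behavior)."""
    # Each read/write operation moves at most one block; a file smaller than
    # the block size still costs one whole operation.
    ops_per_file = math.ceil(file_size / block_size)
    total_ops = num_files * ops_per_file
    total_bytes = num_files * file_size
    # Parallel threads overlap the fixed per-operation latency...
    latency_time = total_ops * op_latency_s / threads
    # ...but the bytes themselves still stream through a fixed aggregate bandwidth.
    transfer_time = total_bytes / bandwidth
    return latency_time + transfer_time


if __name__ == "__main__":
    # Hypothetical parameters; real latency and throughput depend on instance type and region.
    params = dict(threads=10, block_size=8 * MIB, op_latency_s=0.05, bandwidth=100 * MIB)
    small = estimate_seconds(2_000_000, 5 * KIB, **params)
    large = estimate_seconds(2_000, 5 * MIB, **params)
    print(f"2,000,000 files x 5 KiB: {small / 3600:.1f} hours")
    print(f"2,000 files x 5 MiB:     {large / 60:.1f} minutes")
```

With these assumed parameters, the estimates come out to roughly 2.8 hours versus under 2 minutes. This is the same hours-versus-minutes gap described above. The streaming term is nearly identical in both cases, so in this model the difference comes almost entirely from the number of block-sized operations.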
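The goal that the GPU "never has to wait for data" is usually met by overlapping the loading steps (network download, disk I/O, preprocessing) with computation. Below is a minimal sketch of that idea using only the Python standard library. A background thread prepares batches ahead of time into a bounded queue while the main loop consumes them. `load_and_preprocess` and `train_step` are hypothetical stand-ins that only sleep to simulate I/O and GPU work. This is an illustration of prefetching, not SageMaker's or any framework's implementation.

```python
import queue
import threading
import time

_SENTINEL = object()  # marks the end of the stream


def load_and_preprocess(i: int) -> list:
    """Hypothetical stand-in for downloading, reading, and preprocessing batch i."""
    time.sleep(0.01)  # simulated I/O wait
    return [i] * 4


def prefetching_batches(num_batches: int, depth: int = 4):
    """Yield batches produced by a background thread, keeping up to `depth` ready."""
    buf = queue.Queue(maxsize=depth)

    def producer():
        for i in range(num_batches):
            buf.put(load_and_preprocess(i))  # blocks while the buffer is full
        buf.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()  # blocks only if the producer has fallen behind
        if item is _SENTINEL:
            return
        yield item


def train_step(batch: list) -> int:
    """Hypothetical stand-in for the GPU computation on one batch."""
    time.sleep(0.01)  # simulated compute
    return sum(batch)


if __name__ == "__main__":
    start = time.perf_counter()
    total = sum(train_step(b) for b in prefetching_batches(50))
    print(f"total={total}, elapsed={time.perf_counter() - start:.2f}s")
```

Because loading batch *n+1* overlaps with computing on batch *n*, the elapsed time approaches the larger of the two costs rather than their sum. Threads work here because the simulated work is I/O-bound. CPU-heavy preprocessing in pure Python typically needs worker processes instead, which is the role played by framework loaders such as PyTorch's `DataLoader` (`num_workers`, `prefetch_factor`) or `tf.data`'s `prefetch`.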

#Benchmark cpu gpu neural network training drivers#

Using the latest high-performance libraries and GPU drivers also helps you get the most out of your GPUs.

#Benchmark cpu gpu neural network training code#
